A qualitative study on ethical issues related to the use of AI-driven technologies in foreign language learning

The findings of this qualitative study reveal that AI-driven technologies used in foreign language learning can have a positive impact on students’ well-being, for example by supporting the development of their language skills, especially the productive ones, i.e., speaking and writing. This has been confirmed by other research studies9,10,11. For instance, Reiss11 claims that AI-driven technologies have the potential to enhance student learning and complement the work of teachers without dispensing with them. Meunier et al.12 expand on this, stating that human interactions, teacher scaffolding, and emotions are paramount when applying AI-driven technologies in foreign language education and should be carefully observed, as should ethical concerns. The present research supports this view through the students’ subjectively perceived experience. In addition, Wiboolyasarin et al.13 found consistent gains in speaking fluency, writing quality and learner motivation across 34 empirical papers on second-language chatbots, although the authors warn that most studies still rely on small samples and short interventions. Similarly, Law’s14 scoping review of generative-AI applications in language teaching concludes that GenAI lowers entry barriers to spontaneous interaction and personalised feedback, but also amplifies the need for critical data-literacy instruction so that learners recognise hallucinations and biased outputs.

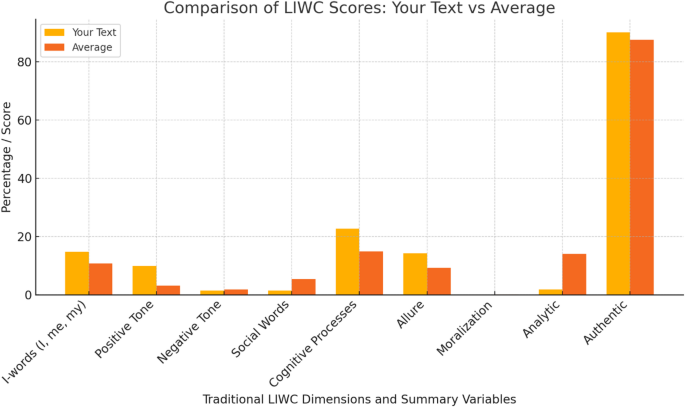

Despite the positive aspects that AI-driven technology brings to foreign language education, certain ethical issues reduce these benefits. As the results of this qualitative study indicate, although students were relatively positive about their subjective safety when using AI-driven technology in foreign language learning, they were fairly concerned about the safety of their personal and private data. This concern reflects the principle of transparency, or the so-called principle of algorithmovigilance, which applies when users do not know what happens to their data inside the black box15. For instance, Stöhr et al.16, in a multi-institution survey of 2,100 higher-education students, observed that willingness to share data correlated strongly with perceived self-efficacy in controlling AI settings, rather than with demographic variables. By contrast, the pilot intervention of Polakova and Klimova17 in EFL chatbot classes showed that non-IT majors felt substantially less in control and therefore demanded stricter institutional guidance.

Furthermore, as the findings reveal, the principle of physical safety plays an important role in the use of AI-driven technologies in learning, which is in line with the study by Wei and Zhou6. As Mezgár and Váncza18 put it: “The acceptance of AI technology by the users depends on their trusting in these systems that is influenced in a high degree both by the related legal environment, standards and the technical background. People can hardly be convinced to trust in a “black box” technology.” Hence, features like safety, security, transparency, and explainability are of crucial importance. In fact, this last point also reflects the students’ trust in AI-driven technology in this study, since the majority of them are skeptical in this respect.

To give a clear-cut insight into how this trust can be violated when using AI-driven technology in foreign language learning, an example of a Replika app conversation between a student in this study and a virtual chatbot friend is provided. It shows a request that one of our students received from an AI-driven chatbot, probably one of the most advanced, that is used for L2 practice. The chatbot clearly asked for inappropriate pictures without any obvious reason or intention on the part of the student, which seems highly inappropriate and also potentially dangerous (Fig. 8). AI is trained on a huge volume of data (big data) from various people and sources, and this is a possible explanation of where Replika’s reaction comes from. Nevertheless, it is important to draw attention to such potentially serious issues, which could (and very probably will) emerge.

Fig. 8: Replika screenshot requesting sexy photos.

Therefore, it is not possible to let engineers, designers, and programmers, not to mention business people, simply create something without keeping in mind the numerous ethical issues that are necessarily related to the field. It also seems that ethics-related issues will gain momentum when various kinds of virtual, augmented, and mixed reality become an everyday part of our lives. For all these reasons, interdisciplinary interconnectedness seems crucial, as it will be necessary to connect technological aspects with ethical considerations, which will enable the survival of our society and of its individual members19.

The authors of this study recommend making students aware of such cases and encouraging them to carefully consider the personal information and personality data they share, which can still be easily retrieved by AI-driven technology and misused. Moreover, there are not yet any legal guidelines that would protect the individual rights of students when using AI technology for learning purposes. As the findings of this study indicate, students of computer science might be aware of the threats when using AI technology, but other students might not. Overall, there should be an open global call for joint cooperation between all stakeholders, i.e., AI developers, academics, and end-users.

Limitations

The limitations of this qualitative study include the fact that most of the respondents were computer specialists who were naturally more or less aware of some ethical issues, such as misuse of data, and thus acted accordingly when they used AI in foreign language learning. Therefore, the findings should not be overgeneralised, and this study should be replicated with users of AI from other fields, e.g., social sciences, who are less aware of these threats, in order to confirm or refute these findings.

Moreover, further studies utilizing other methodologies, such as quantitative designs, will be needed, and they are likely to appear in the near future, as this seems to be an important area in the development of education supported by AI-driven tools. Further ethical challenges, such as the accuracy and bias of teaching material and the way in which the language is taught and practiced, to name just a few, also need undivided attention and further research.

Future lines of research

Based on the recommendations of this study, further research should focus on the use of AI-driven technologies in other fields, such as social sciences. Such research should make AI developers aware of the need for cooperation between them, educators, and end-users, and in this way secure transparency in the use of AI-driven technologies in education. Joint empirical research on the use of AI-driven technologies in specific fields of human activity should also be performed.

Practical recommendations

Several practical recommendations follow from our findings. First, educational institutions should integrate short, practical modules on AI ethics into language and ICT courses, especially for non-technical students, to raise awareness about data privacy, algorithmic transparency, and potential misuse of AI-driven tools. Second, developers of AI-driven language learning apps should be required to include clear, user-friendly disclosures about how personal data is collected, stored, and used, ideally before the user begins interacting with the app. Developers should also be trained in how to develop AI tools ethically. Third, universities should establish review committees to vet AI tools used in education, ensuring they meet ethical standards and do not expose students to inappropriate content or data risks, as illustrated by the Replika incident. Fourth, educational institutions should implement anonymous reporting systems for students to safely report unethical or uncomfortable experiences with AI tools, which can then be used to inform policy and tool selection. Finally, we encourage collaboration between AI developers, educators, psychologists, and ethicists to co-design AI tools that are not only pedagogically effective but also ethically sound and psychologically safe for students20.
